00:00

All right, let's kick this off. Thank you, I appreciate the time today. My name is Brian Cadell. I'm a cloud solution architect at Intel, and I'm joined by Mr. Josh Hillier, principal engineer and senior director of cloud tools. Today we're going to be talking about cloud management and tools, and I just want to give you

00:20

some awareness of what Intel is doing in the field. As we think about the enterprise, approximately $2.5 trillion will be spent on IT over the course of one year: technology, software, and services to support business objectives. Our role at Intel, and our success, depends on our ability to understand those objectives and

00:46

articulate and demonstrate how we can help our partners achieve theirs. Also, according to IDC, 55% of all investment in information and communications technology will be focused on digital transformation initiatives by 2024. So as we look at this, I think you'd agree we're past the cloud adoption phase and into the cloud maturity phase in terms of where everyone is heading, and

01:15

they're demanding more from the cloud. So as we think about that, we think about infrastructure, tools, technologies, and ways to optimize and enable it. Digital transformation and cloud adoption are creating an unprecedented opportunity and moving at a torrid pace: 70% of organizations stated that they have accelerated their cloud migration in the past

01:38

year. Then there's the explosion in data: according to IDC, 67 zettabytes of new data was created in 2020, and that is projected to rise to 180 zettabytes created and replicated by 2025. Together with this trend, the architectures and the applications associated with that data are continuously evolving.

02:04

This is a glimpse of how the world basically runs on Intel in the cloud, and I wanted to show the breadth of it. This is not everyone. We've listed the top three CSPs, AWS, Azure, and GCP, and we also wanted to include the OEM and ISV business that we've been doing for a long time. Intel is everywhere; it's pervasive

02:28

throughout all the business verticals. I also wanted to give you a quick glimpse of cloud efficiency and performance-optimized workloads for all environments. We have built-in accelerators that drive performance for specific workloads and offload CPU cycles for other things. From a sustainability

02:52

perspective, we made an acquisition of a company called Granulate a little over a year ago. It basically helps you do more with less when you've already exhausted your traditional reserved instances and other savings plans and you're trying to get more out of your compute. From an artificial intelligence perspective,

03:11

there are many ways we drive these outcomes, with built-in AI accelerators and other portfolio products, including Habana, FPGAs, and the software that connects to the silicon. And last, security: we help drive this through confidential computing with technologies like SGX and TDX. Finally, this is the breadth of what Intel is doing.

03:36

When we think of everything from 5G to autonomous driving, Intel is there, driving that portfolio. I just wanted to give you this glimpse: when we think of product enablement, we're driving cost, we're driving performance, and we're making those generational improvements across all of

03:57

these different business verticals. With that, I want to turn it over to Josh to cover the rest. Good deal, thanks, Brian. How are you guys doing? Very good? OK, sweet. I see I had the same problem, right?

04:14

So, like I said, I'm Josh, principal engineer. I run our cloud-native tools and solutions group in DCAI, our Data Center and AI technical office. That's where I come from. Yeah, I'm still getting feedback. Oh, I'm sorry, that's me a little bit. OK, I'll go over there.

04:40

Wait, wait. Hotter, getting hotter... no. All right, cool. I'm going to spend a couple of moments talking about the fourth-generation processor. So why are we here? Is anybody asking that: why is Intel here at Pure? Maybe, maybe not? OK. Well, if you're thinking about that,

04:58

Brian is going to answer that in a moment. OK. But I'm going to talk a little about what our fourth-generation processor is. Brian talked a little bit about accelerators, and I'm going to do that too. One thing that's interesting about Intel is, yeah, we've been around a long time, right? Got it.

05:12

But as I was reminded just a few moments ago, we get lost a lot of the time when the new shiny object, the new shiny OEM, or the new shiny thing comes out. What's important for me to express to you is that we are playing in a lot of zones, and we are very relevant in how we look at workloads, how we look at optimizations, and how we produce real business

05:38

outcomes. What is really happening? What does it mean when I have better cost efficiency? What does it mean when I have better optimization and performance? So let me dig in. Here's fourth gen, and a couple of key points about it that I believe are important. I'm going to start at the very

05:55

end here, which is telemetry. For those who don't know me, I'm a principal engineer and my background is in telemetry. If you want to talk PMUs or you want to talk counters, that is what I spend my weekends doing when I'm not out on the lake: thinking about how it really works. We have built-in advanced telemetry that's

06:15

doing a better job of showing what's happening from an electricity standpoint, understanding what it means for consumption and how we throttle it up and down. Above that, we have Optimized Power Mode. This gives us the ability to use less power without taking so much away from performance, because of how it works in a CPU: when you want to cut power,

06:37

we go into what's called power save mode, and it drops the performance of the processor. So in the CSP world, when you go to a cloud service provider, which mode do you think they mostly set our processors to? Power save. Why? Because they run data centers, and if you run a data center, you want to use as little power as possible. What you can do is actually flip those

06:56

to performance mode. The CSP doesn't like it, but you can, and you're allowed to do it. Now we're starting to figure out how to keep performance up while shifting the power envelope down. On the right, we start digging into the accelerators.
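The power-save versus performance choice described above is, on a Linux host, visible as the cpufreq scaling governor. The sketch below is an illustration only, assuming a Linux system that exposes `/sys/devices/system/cpu/cpu*/cpufreq/scaling_governor`; many cloud VMs do not expose it, and the Optimized Power Mode mentioned earlier is a separate platform setting that this code does not read. It only reports which governor each CPU is running.

```python
from pathlib import Path

# Linux exposes each CPU's frequency governor through sysfs when a cpufreq
# driver is loaded. Assumption: a Linux host; many cloud VMs expose nothing here.
SYSFS_CPU = Path("/sys/devices/system/cpu")

def read_governors(cpu_root: Path = SYSFS_CPU) -> dict[str, str]:
    """Return {cpu_name: governor} for every CPU that reports one."""
    governors = {}
    for gov_file in sorted(cpu_root.glob("cpu[0-9]*/cpufreq/scaling_governor")):
        cpu_name = gov_file.parent.parent.name  # e.g. "cpu0"
        governors[cpu_name] = gov_file.read_text().strip()
    return governors

def summarize(governors: dict[str, str]) -> dict[str, int]:
    """Count how many CPUs run each governor (e.g. 'powersave', 'performance')."""
    counts: dict[str, int] = {}
    for gov in governors.values():
        counts[gov] = counts.get(gov, 0) + 1
    return counts

if __name__ == "__main__":
    govs = read_governors()
    if not govs:
        print("No cpufreq governor exposed (common on cloud VMs).")
    else:
        print(summarize(govs))
```

To switch a host to the performance governor, you would write `performance` to those files as root or use `cpupower frequency-set -g performance`; whether a given cloud instance lets you do that depends on the provider.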

07:12

We have a full menu of accelerators. It would take me about three hours to walk through each one, their architectures, and what they do. The short message is that if you're doing an AI workload, an analytics workload, a compute workload, or a security workload, we've got an accelerator for you.

07:33

We've got things like AMX, IAA, TDX, which I'll talk about in a moment, SGX, QAT, and Crypto NI. There's all this good stuff, and you go, well, OK, that's kind of cool, but what is it used for? Here's an example: have you ever heard of NGINX?

07:51

Have you heard of NGINX? Maybe a couple? OK. It's a web server, pretty rudimentary, right? We love it in microservices, where we love to spin those up and shut them down: use it, abuse it, leave it. When you use NGINX with Crypto NI and with

08:07

QAT, you get a double-digit performance improvement. OK, so what does that mean? It means you're going to get your menu in Netflix faster. It means you're going to get recommendations in Amazon quicker, where it says here are some products you're interested in. Those are the kinds of things that start to

08:25

really feed into: OK, I can offer services faster. You can see some of it here, and I'll take it a little further. Now, I'm going to speak very directly here: there have been a lot of announcements over the last couple of weeks around AI. Has anyone seen them? Maybe.

08:44

In every customer conversation I've been in over the last month, they've asked me one thing: what is your position on generative AI and ChatGPT? What are you doing in this space? What is Intel doing? Are you guys even doing AI? An announcement came out about a week ago saying this other company is the AI provider,

09:08

and I didn't want to raise my hand and say, hey, we're doing that too, but I kind of had the moment of, well, we've actually been in AI a while. There are some workloads that we're better at and some we're getting better at. Let me show a couple up here. Whether it's conversational AI, which you see on the left,

09:25

or what you see with the no-code/low-code AI platform, we are performing better. A lot of the comparison you see here comes from how we do benchmarking. Has anyone heard the word benchmarking and understand how that works? OK. When we do benchmarking, we look at a few categories: gen on gen, gen versus competition, and non-optimized versus

09:51

optimized. Those are the three main zones we benchmark. You'll notice that in some of these, like the second one, we're going against the competition and asking how we compare against our competitor, and in some it's gen on gen, like the first and the fourth, where it's faster gen on gen.
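Each of the three benchmark categories boils down to comparing a baseline run against a candidate run on the same metric. A minimal sketch, assuming you already have one number per run (throughput, where higher is better, or latency/runtime, where lower is better); the `Run` type and the category labels are hypothetical, not an Intel benchmarking tool.

```python
from dataclasses import dataclass

@dataclass
class Run:
    label: str
    value: float            # measured metric, e.g. requests/s or seconds
    higher_is_better: bool  # True for throughput, False for latency/runtime

def speedup(baseline: Run, candidate: Run) -> float:
    """How many times better the candidate is than the baseline (>1 means better)."""
    if baseline.higher_is_better != candidate.higher_is_better:
        raise ValueError("runs must use the same metric direction")
    if baseline.value <= 0 or candidate.value <= 0:
        raise ValueError("metric values must be positive")
    if baseline.higher_is_better:
        return candidate.value / baseline.value
    return baseline.value / candidate.value

# The three comparison categories from the talk as (baseline, candidate) pairs.
# Labels are illustrative placeholders.
CATEGORIES = {
    "gen_on_gen": ("previous-generation CPU", "current-generation CPU"),
    "gen_vs_comp": ("competitor CPU", "current-generation CPU"),
    "optimized_vs_not": ("default configuration", "tuned configuration"),
}
```

Keeping the metric direction explicit avoids the common mistake of dividing latencies the same way as throughputs, which would report a 2x improvement as a 0.5x regression.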

10:08

So we're just comparing against ourselves, saying: look, here's Ice Lake compared against Sapphire Rapids, so our 3rd Gen Xeon Scalable processor versus our 4th Gen Xeon Scalable processor. If anybody from marketing is here, hopefully I got that correct. I feel like I did. Yes. So when we go from Ice Lake to Sapphire

10:28

Rapids, we're seeing that type of improvement. So we are playing heavily, and I would say: if you're interested in AI, please take another double-click into the platforms we have for AI and how they work. Let's continue on and talk a little bit about security. Does anybody care about security in here?

10:47

Nobody? No? I mean, we don't do anything secure, right? I don't care. So let's look at the options here. This is kind of funny: we had a customer event a month ago in Orlando, and Pat Gelsinger was there.

11:05

Pat is our CEO. He was at Intel, then went to EMC, then VMware, and came back, and now he's our CEO. He was on stage and he goes, let's talk about security. He busted out an undershirt that said "Trust No One", as in zero trust, get it, like the thing, right?

11:25

To me it was totally spot on: where's your trust? What do you have to trust? The yellow and black highlight shows what you have to trust. In the top example, when you're not using the confidential computing Brian was talking about,

11:43

what do you have to trust? You have to trust everything. I've got to trust the CSPs. I've got to trust the admins. I've got to trust that the BIOS hasn't been compromised. I've got to make sure that the operating system and any of the packages I'm using, all of that has to

11:57

be in check. What we've provided to the market, and you can see the stair-step down here, is things like Total Memory Encryption, which shrinks my trust window. Now there are a couple of things I don't have to trust anymore; they're already outside my trust boundary.

12:13

As you continue down the stack, this is brand new, just came out with our fourth gen: TDX. What is TDX? It basically takes a virtual machine, a VM image, and protects that image.
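The stair-step on the slide (no confidential computing, then TME, TDX, and SGX) can be read as a shrinking set of components the workload owner must trust. The sketch below models that as plain sets; the component names are a deliberate simplification chosen for illustration, not Intel's definition of each technology's trusted computing base.

```python
# Simplified trust model. Component names are illustrative groupings, not an
# exhaustive trusted computing base: real ones also include microcode,
# the TDX module, attestation services, and more.
FULL_STACK = frozenset({
    "cpu_hardware",
    "physical_memory_access",   # someone with hands on the DIMMs or memory bus
    "bios_firmware",
    "cloud_provider_admins",
    "hypervisor_and_host_os",
    "guest_os",
    "app_packages",
    "app_code",
})

TRUSTED = {
    # No confidential computing: you have to trust everything.
    "none": FULL_STACK,
    # TME: memory is encrypted, so physical memory attacks drop out.
    "tme": FULL_STACK - {"physical_memory_access"},
    # TDX: the guest VM is isolated from the host; trust the CPU and your VM.
    "tdx": frozenset({"cpu_hardware", "guest_os", "app_packages", "app_code"}),
    # SGX: only the CPU and the code/data inside the enclave.
    "sgx": frozenset({"cpu_hardware", "app_code"}),
}

def removed_from_trust(level: str) -> frozenset:
    """Components you no longer have to trust at `level`, versus no protection."""
    return FULL_STACK - TRUSTED[level]

if __name__ == "__main__":
    for level in ("none", "tme", "tdx", "sgx"):
        print(f"{level}: trust {sorted(TRUSTED[level])}")
```

Each level's trusted set is a strict subset of the previous one, which is the "stair-step" in set form.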

12:33

OK. So instead of asking which zones I have to protect, you're just protecting the guest VM. In the cloud space, or in a VMware environment, what have you, this gives me a lot more options. I can basically remove the other areas I have to worry about, and I just have to

12:52

trust what happens within that virtual machine. Now, at the bottom is where we get, I'd say, super jiggy: Intel SGX, Software Guard Extensions. SGX is an enclave. It puts your protected data or your apps into a protected zone on the server, and at that point I don't have to

13:18

worry about anything over there. I don't trust them, and I don't care, because all I have to worry about is my confidential data that's in that enclave. So as we look at the future with Pure, and we look at storage, this is a huge conversation: what is my

13:37

security posture? What am I going to do? How much will I secure? How much do I need to secure? How much do I need to trust, and who do I need to trust? Now let's pop over to one of my absolute favorite words, and that is

13:56

optimization. Two weeks ago I was out having lunch with a healthcare vice president. We were sitting down chatting, and I said, hey, what do you think of the word optimization? He goes, I love optimization. And I'm like, sweet, what does that mean to you?

14:13

He goes, optimization for me is that patient care is the best it can be. And I'm like, OK, sweet, what about your cloud spend? He's like, no, I don't care about that. I'm like, but do you, though? What's funny is that optimization has many meanings. That's my point.

14:29

For us, optimization is how we make sure workloads are running at their most optimal, in both performance and cost. Here are some examples. We talk about load-balancing a web tier on connections with NGINX; there's that example right there with Intel Crypto Acceleration, at 1.52x faster.
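A "1.52x faster" result is easier to reason about when converted into time and capacity. A small worked sketch, assuming the speedup is a throughput ratio that holds at your load and that capacity scales linearly with node count (both are assumptions, not claims from the talk): 1/1.52 is about 0.658, so the same work takes about 65.8% of the time, or roughly 34% fewer nodes at constant load.

```python
import math

def time_fraction(speedup: float) -> float:
    """Share of the original time needed for the same work at `speedup`x."""
    return 1.0 / speedup

def capacity_reduction(speedup: float) -> float:
    """Share of nodes you could drop at constant load, if throughput scales linearly."""
    return 1.0 - time_fraction(speedup)

def nodes_needed(baseline_nodes: int, speedup: float) -> int:
    """Nodes needed to serve the load that `baseline_nodes` served before."""
    return math.ceil(baseline_nodes / speedup)

if __name__ == "__main__":
    s = 1.52
    print(f"time per unit of work: {time_fraction(s):.1%} of baseline")
    print(f"capacity you could free: {capacity_reduction(s):.1%}")
    print(f"100 baseline nodes -> {nodes_needed(100, s)} nodes")
```

Rounding up in `nodes_needed` is deliberate: you cannot run a fraction of a node, so 100 baseline nodes become 66, not 65.8.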

14:51

You can see the different types of workloads down the list. We care about workloads; we care about whether that workload performs the best. When we say optimizations, we're looking at the whole stack. If we look on-prem, I look at the knobs in the hardware, the knobs in the software, the CPU architecture, and the

15:16

features and accelerators that are available, and I build a recipe for that specific workload so that it runs the best. Any questions on this before I go? Because I'm going to go one click deeper. OK. All right. This is our latest announcement on optimizations,

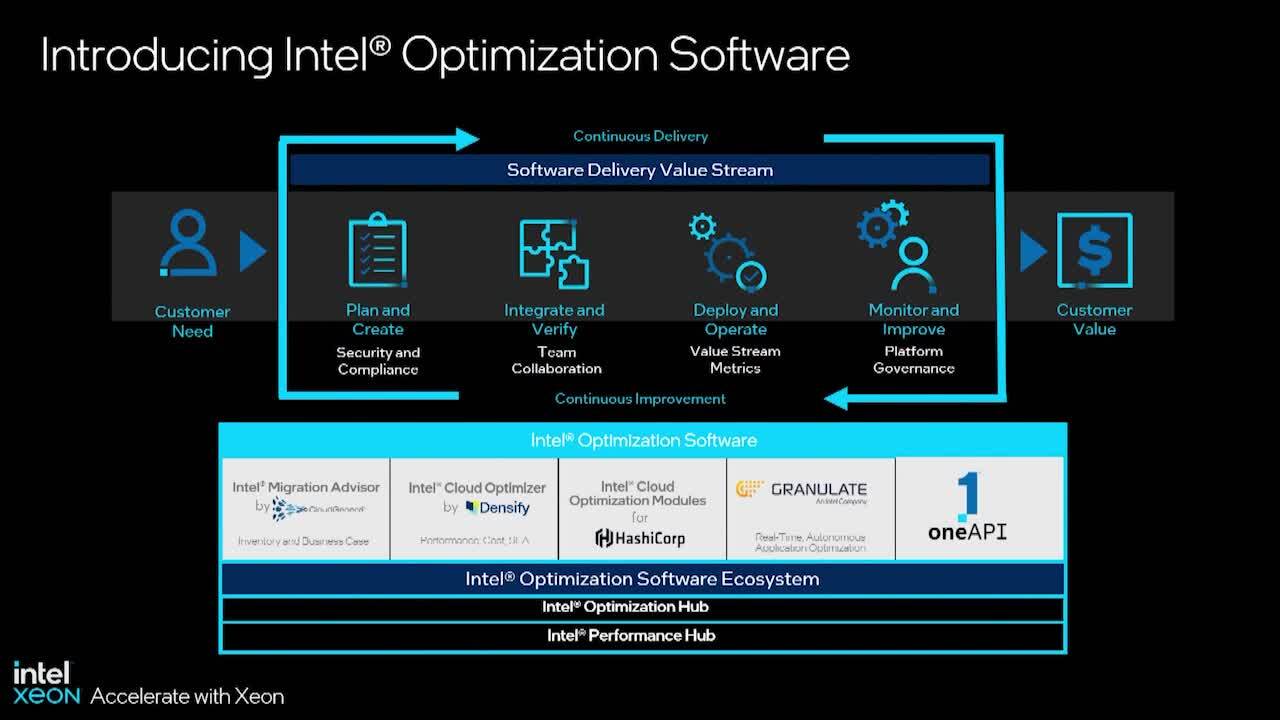

15:37

on what we're doing from a software and capability standpoint. Across the top is that continuous delivery cycle: you're planning your software, building it, verifying it, deploying it, monitoring it, and getting value out of it, whatever that offering is. Where Intel has taken a big step is in this optimization

15:58

software package here. We've got a couple of partnerships, and we liked Granulate so much that we decided to buy them; that's what Brian was talking about earlier. We really liked what they did. I didn't believe that they could do it, I didn't believe that it worked, and I was

16:12

testing them in the lab. We saw that it worked, and that was me, and my VP said let's buy them, and we got them. You can quote me on that. Yes. But let's go through them. For each of the stages above, we have a different optimization capability. Migration Advisor is for when you're moving from

16:31

on-prem to cloud and you want to ask: what's my assessment, and where do I need to be? This gets into things like instance type and CSP type: which one? What does your storage back end look like? What storage do you want? What's your network configuration? It goes through the full stack.
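The instance-type question that Migration Advisor and similar tools answer is, at its core, a right-sizing search. A minimal sketch, assuming you have observed peak vCPU and memory usage and a catalog of instance types with hourly prices; the catalog entries, prices, and 20% headroom default are hypothetical, and real tools weigh far more (storage, network, licensing, CSP-specific pricing).

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class InstanceType:
    name: str
    vcpus: int
    memory_gib: float
    hourly_usd: float

def pick_instance(catalog: list[InstanceType], peak_vcpus_used: float,
                  peak_mem_gib_used: float, headroom: float = 0.2) -> Optional[InstanceType]:
    """Cheapest instance whose capacity covers observed peaks plus headroom."""
    need_cpu = peak_vcpus_used * (1 + headroom)
    need_mem = peak_mem_gib_used * (1 + headroom)
    fits = [i for i in catalog if i.vcpus >= need_cpu and i.memory_gib >= need_mem]
    return min(fits, key=lambda i: i.hourly_usd, default=None)

if __name__ == "__main__":
    # Hypothetical catalog: names and prices are placeholders, not real CSP offerings.
    catalog = [
        InstanceType("hypo-small", 2, 8, 0.10),
        InstanceType("hypo-medium", 4, 16, 0.20),
        InstanceType("hypo-large", 8, 32, 0.40),
    ]
    print(pick_instance(catalog, peak_vcpus_used=3, peak_mem_gib_used=10))
```

Returning `None` when nothing fits makes the "you need a bigger family" case explicit instead of silently picking the largest instance.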

16:52

The next one across is Intel Cloud Optimizer by Densify. Have you heard of Densify before? Yeah, a little bit. To me, Densify is in that rock-star category. Densify will plug in, look at the utilization in your CSP account, and be able to tell you,

17:14

hey, guess what? You should be on this. We can save you a million bucks if you move to this instance type, or if you move to this PaaS service instead of your IaaS service; here are some of the PaaS equivalents that could be available. What's great is that two or three months ago,

17:35

their CTO, Andrew, was talking to me and said, hey, we just launched something. I was like, OK, what have you got? He goes, we just launched our ability to go from cost optimization back to infrastructure changes. If you're familiar with Densify today, they give you a report and you choose whether you want to

17:53

execute that report. From a FinOps standpoint, the operator can say, hey, developer, please do this, or they can say, hey, we're going to go do it manually. Where Densify has taken the next step is automation: it will actually go change your infrastructure for you. So when we were kidding about the Terminator a

18:13

moment ago, that was the Skynet reference, as we truly move to machine-to-machine communication where changes happen dynamically and no people are involved in the decision. Frightening a little bit, epically cool, yes. HashiCorp: has anybody been using HashiCorp, open-source Terraform? OK. HashiCorp is an infrastructure-as-code company.

18:41

Think of it as the easy button when you're writing architecture: instead of writing a script, like a bash script or Perl or whatever, to roll out your architecture, you put it in a file. HashiCorp basically lets you modularize your architecture, making it faster to set up, destroy, and change. So we've been working with HashiCorp to take all of our

19:05

optimizations for things like MySQL. Is anybody using MySQL in the room, by chance? Or Postgres, or MariaDB, or MS SQL, or any database? I got you. OK. So we're taking the optimizations we've cooked up that run better on Intel architecture.

19:23

We've baked them in as IaC modules so that customers and partners can say, hey, I want to deploy MySQL, and I'd like it to run 1.5x faster, or I'd like it to save me 30%. They grab the module and leverage it. What's fascinating to me is that these two partners right here have come together to

19:47

create that Skynet 2.0 I was talking about. Basically, you can connect the dots between cost optimization and performance awareness to actually change the infrastructure on the fly. Now, you need to choose how you want it. "On the fly" scares a lot of people: does that mean immediately? Does it mean next reboot? Does it mean the maintenance window? Your call. But it allows that loop.
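The closed loop described here (a recommendation becomes a desired state, infrastructure as code applies it, and a policy decides when) can be sketched in a few functions. This mirrors the general plan/apply idea behind IaC tools such as Terraform, but it is not Terraform's, Densify's, or Intel's API; the attribute names and the `ApplyPolicy` options (immediate, next reboot, maintenance window) are assumptions taken from the choices mentioned in the talk.

```python
from datetime import datetime, time
from enum import Enum

class ApplyPolicy(Enum):
    IMMEDIATE = "immediate"
    NEXT_REBOOT = "next_reboot"
    MAINTENANCE_WINDOW = "maintenance_window"

def plan(current: dict, desired: dict) -> dict:
    """Declarative diff: attribute -> (current, desired) for every attribute that differs."""
    keys = sorted(current.keys() | desired.keys())
    return {k: (current.get(k), desired.get(k)) for k in keys if current.get(k) != desired.get(k)}

def in_window(now: datetime, start: time, end: time) -> bool:
    """True if `now` falls in [start, end); handles windows that cross midnight."""
    t = now.time()
    if start <= end:
        return start <= t < end
    return t >= start or t < end

def may_apply(policy: ApplyPolicy, now: datetime, window_start: time,
              window_end: time, rebooting: bool) -> bool:
    """Decide whether a planned change may be applied right now."""
    if policy is ApplyPolicy.IMMEDIATE:
        return True
    if policy is ApplyPolicy.NEXT_REBOOT:
        return rebooting
    return in_window(now, window_start, window_end)

def reconcile(current: dict, desired: dict, policy: ApplyPolicy, now: datetime,
              window_start: time, window_end: time, rebooting: bool) -> dict:
    """One pass of the loop: return the desired state if allowed, else keep the current one."""
    if plan(current, desired) and may_apply(policy, now, window_start, window_end, rebooting):
        return dict(desired)
    return dict(current)
```

Separating `plan` from `may_apply` keeps the "what changes" question independent of the "when is it allowed" question, which is exactly the choice the talk leaves to the operator.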

20:10

The other software is Granulate. Has anybody heard of Granulate before? Because I just said it to Chris. Granulate has an interesting angle on this. They've looked at specific applications, like Java, and they've looked at how

20:31

the application communicates with the kernel layer and how to basically reroute that traffic to make the application perform faster, so you can do more with less. I can actually pack more in, or I can reduce my instance size. I think of it as a wicked-smart scheduler

20:54

slash intuitive, autonomous agent that continues to tune as you're operating. Again, they were so cool we decided to buy them. It was just so intriguing how they did it and how they're doing the communication. Then there's oneAPI: all of our tool sets, OpenVINO, and our performance tools are all baked

21:17

into oneAPI at the bottom. We've got two other innovations: one has come out and one is about to come out. The Intel Optimization Hub is live, and I'm going to show you that in just a moment. The Performance Hub is next up on the radar for us. So I was going to ask a question, if you think you

21:40

see... Definitely. Yeah, yeah. Well, the range we're seeing from cost optimization is 30 to 50%. OK? And that's using some combination of something like Densify and Granulate, you know,

22:09

we've seen customers walk in and save something like a million bucks over a year, getting basically the right density and getting that speedup. Some of those are quoted on Intel's website. I'm trying to think of one very specifically; it'll probably come to me in just a moment.

22:29

Yeah, please. With Granulate, yeah, it exposes profiles, and then you basically put it into protect mode to activate the agent. Over the course of about nine months, we saved them enough money that we absorbed the

23:28

cost of their growth. They were going to double, and they had about 1,300 Databricks instances over many clusters, so we basically absorbed the cost of that growth, letting them double that environment and still remain cost neutral. That's a real example. It takes some time, and it depends on the workload, so we really have to understand what that

23:49

workload is. We'll get to a point where we tell you there's enough savings here to move forward, or we'll say there's probably not enough. That's been a really good success. Thank you. Part of that capability is a tool we picked up called gProfiler, which is what Brian was talking about.
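The cost-neutral doubling in this example implies a specific per-unit saving. Assuming total spend scales linearly with the number of instances (an assumption; real bills also have fixed costs), growing by a factor g at flat spend requires each unit to cost 1/g of what it did, which is a saving of 1 - 1/g: 50% for a doubling from about 1,300 to about 2,600 instances. Read the other way, the 30 to 50% range quoted earlier would absorb growth of roughly 1.43x to 2x.

```python
def required_savings(growth_factor: float) -> float:
    """Per-unit cost reduction needed to grow by `growth_factor` at flat total spend."""
    # growth * units * unit_cost * (1 - s) == units * unit_cost  =>  s = 1 - 1/growth
    return 1.0 - 1.0 / growth_factor

def absorbable_growth(savings: float) -> float:
    """Growth factor that a per-unit saving of `savings` can absorb at flat total spend."""
    return 1.0 / (1.0 - savings)

if __name__ == "__main__":
    print(f"doubling needs {required_savings(2.0):.0%} per-unit savings")
    for s in (0.30, 0.50):
        print(f"{s:.0%} savings absorbs {absorbable_growth(s):.2f}x growth")
```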

24:11

gProfiler is out on GitHub; you could pull it down today. It does online and offline awareness of what workloads you have and kicks back: here are some things you should think about, and here are some of the performance things you should look at from a tuning standpoint. What gProfiler also does, which I think is amazing, is tell you whether you're a good fit for

24:36

Granulate, the adaptive, autonomous application optimization. It'll say yes, you're a good candidate, or no, you're not, but by the way, here are all the other apps you're running and here are some good performance items you can do. And it's free.

24:53

That was one of the best parts of what we got: it's free to consumers. I use it a lot when I profile an account: I go to a customer and say, hey, let's run this. They run it on one of their representative nodes, I grab back the data, look at it, and I can give a quick assessment.
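Profilers such as gProfiler report where CPU time goes by sampling call stacks, and flame graphs are commonly built from the "folded" (collapsed) stack format used by Brendan Gregg's FlameGraph tools, one line per unique stack: `frame;frame;frame COUNT`. Whether and how gProfiler exports that exact format is not covered in the talk, so treat the input format here as an assumption. The sketch computes the two numbers you usually read off a flame graph: inclusive samples per function (how often it appears on the stack) and self samples (how often it is the leaf, actually on-CPU).

```python
from collections import Counter

def parse_folded(lines):
    """Parse folded-stack lines of the form 'root;child;leaf COUNT'."""
    stacks = []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        stack, _, count = line.rpartition(" ")
        stacks.append((stack.split(";"), int(count)))
    return stacks

def inclusive_counts(stacks) -> Counter:
    """Samples in which each function appears anywhere on the stack."""
    totals = Counter()
    for frames, count in stacks:
        for frame in set(frames):  # count recursive frames once per sample
            totals[frame] += count
    return totals

def self_counts(stacks) -> Counter:
    """Samples in which each function is the leaf frame (the top of a flame)."""
    totals = Counter()
    for frames, count in stacks:
        totals[frames[-1]] += count
    return totals

if __name__ == "__main__":
    sample = ["main;handle;parse 5", "main;handle;write 3", "main;gc 2"]
    stacks = parse_folded(sample)
    print(inclusive_counts(stacks).most_common(3))
    print(self_counts(stacks).most_common(3))
```

A function with a high inclusive count but a low self count is usually a caller worth drilling into; a high self count points at the "spikes" where CPU time is actually spent.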

25:08

I get excited about flame graphs, if that means anything to anybody. I love flame graphs because they tell me where the spikes are and what the pattern of the application is. That's part of what gProfiler provides very well. All right, moving on, and trust me, we're going to have

25:26

some time for questions. If you have questions about any of our competitors, you can ask Brian. I'll handle it. OK. All right. The last thing just went live this quarter: it's called the Intel Optimization Hub.

25:43

We realized a couple of things. One, it is hard to find our optimizations. It's kind of a nightmare. It's not fun. Or we'll put them into a PDF file and let you hunt 1,000 pages in and see what you find.

26:03

It's brutal. Not fun. There are some of us engineers who have to read those docs; don't be like us, please. So we took all the optimizations, organized by workload, and put them into the Optimization Hub, and it continues to grow week over week with additional optimizations and additional configurations.

26:24

What you can do here is search for a given workload and ask: does Intel have anything on that workload? It'll come back with a couple of things. Say it's a yes; MySQL is a yes. It'll say, here is the benchmarking data on cost and performance.

26:42

Here is the recipe for how to do it. Here is the HashiCorp module for how to run it, if you want it. Or, if you want to PoC it, here's a ready-made container that you can pull and execute that code on. So we're providing all the ingredients and recipes and saying: here you go, here's how we know it runs better.

27:00

You can implement from there. Like I said, we're continuing to add more. And by the way, this opens a door to our engineering team, which I think is great, for folks who are both writing the optimizations and writing the IaC. In my non-weekend, nighttime hours, I write modules with HashiCorp and write code

27:23

snippets. If you go to our repos, you'll see my name out there; I write code for how to implement those things. All the code that my team and I write is put up here as well. So you go in and say, hey, I wonder if they have anything on this design or this architecture. If it's there, we posted it, and again,

27:39

we're continuing to add more there. I know there's feedback, of course: hey, you guys missed a beat here, you don't have that. No problem. Just let us know, and we can get it out there pretty quickly, or make sure that we go after that specific workload.

27:54

I'm excited because, from a developer standpoint, this helps me out a lot. I think it helps the customers I've met a lot too, by making it easy to find stuff, easy to consume it, and easy to put it into practice. And that's it; we wanted to keep it short and sweet. At the end of this, I'll say, and I'm going to cue it up to Brian here in just a

28:13

moment to answer that Pure question: we care about optimizations. We care about our customers and partners having the best cost and the best performance, about articulating it in a way that's easy to consume, and about being loud enough that you know we're still here, because we are actively engaged in just about every community out there to help with

28:40

how do I accelerate it, and how do I make it better? I don't know if you saw it, but on generative AI, we have a partnership with Boston Consulting Group (BCG) that just came out around how to do GenAI in a gated environment where you can publish and grab data for your company, and we have an architecture for it.

29:00

That to me is super exciting: ChatGPT in a secure, private area. I want that all day long. I don't want my PowerPoints going out into the world. No, thank you. So it's: how do you fence it? How do you gate it? So, Brian, I think it'd be good, since we didn't really answer it: why are we here at a Pure

29:16

event? What have you been working on, and what do we bring? Yeah, so a couple of things. Obviously, inside the arrays is Intel. We've got CPUs that sit inside these Pure arrays, but at the same time,

29:31

they're leveraging some of the accelerators and features to do pre- and post-processing of data for dedupe and compression. That's a necessary piece of driving the efficiency that Pure is talking about. Another thing that's important to Intel, and that connects some of the dots back, is what we call Intel accelerators.
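Deduplication and compression, the data-reduction work mentioned here, can be illustrated with fixed-size block hashing plus a general-purpose compressor. This is a minimal sketch, assuming 4 KiB blocks, SHA-256 fingerprints, and zlib; it is not how Pure's arrays or any Intel accelerator implement data reduction, only a picture of the kind of CPU work (hashing and compressing) that accelerators such as QAT can offload.

```python
import hashlib
import zlib

BLOCK_SIZE = 4096  # assumption: fixed 4 KiB blocks; real arrays may use variable sizes

def dedupe_and_compress(data: bytes, block_size: int = BLOCK_SIZE):
    """Split into fixed-size blocks, store each unique block once, compressed."""
    store = {}   # SHA-256 digest -> compressed block
    recipe = []  # ordered digests needed to rebuild `data`
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        digest = hashlib.sha256(block).digest()
        if digest not in store:
            store[digest] = zlib.compress(block)
        recipe.append(digest)
    return store, recipe

def restore(store: dict, recipe: list) -> bytes:
    """Rebuild the original bytes from the block store and recipe."""
    return b"".join(zlib.decompress(store[d]) for d in recipe)

if __name__ == "__main__":
    data = b"A" * BLOCK_SIZE * 3 + b"B" * BLOCK_SIZE + b"A" * BLOCK_SIZE
    store, recipe = dedupe_and_compress(data)
    stored = sum(len(v) for v in store.values())
    print(f"{len(recipe)} blocks, {len(store)} unique, {len(data)} -> {stored} bytes")
```

Hashing before compressing means duplicate blocks are detected without paying the compression cost twice; the recipe plus the store is all that is needed to restore the data.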

29:52

We have affinity programs inside Intel that drive a connection between an accelerator and a solution, connecting the dots. We're running go-to-market programs with VMware leveraging Pure, and also extending to partners like Cohesity and Commvault that are leveraging those accelerators to perform data protection.

30:14

So when we talk about connecting the dots, it's real, because we're actually driving it: Pure, or their partner, is leveraging things like Crypto NI or AVX-512 to drive that solution. There's an affinity that an Intel seller can then leverage to get recognized; that's how they get paid. So making that connection back to what's

30:41

real for Intel is essential; otherwise it's somewhat vaporware. It really needs to have some meat behind it. That's what Chris Johnson and I are working on: the go-to-market strategies and connecting those dots with those various partners. But it all centers around Intel, Pure, and their partners.

30:58

So hopefully that helps.