The new benchmark for AI and HPC storage. Nothing else comes close.

No more starving, idle GPUs. Write speeds scale up to 50% of read performance.*

*In a single namespace. Based on Pure Storage performance testing in a controlled hardware environment.

Highest performance density, ever. Optimize power and cooling costs for energy-hungry GPUs.

Handles the most diverse, multi-modal AI workloads with ease.

Supports powerful AI factories with unparalleled efficiency.

Provides seamless scalability for AI, at any level.

Deploy in half the time, with minimum complexity.

AI demands instant, frictionless, unlimited access to data. FlashBlade//EXA delivers.

Outdated storage is stifling AI innovation. Set it free.

Existing systems can’t keep up because of metadata bottlenecks, causing inefficiencies and costly delays.

Traditional storage lacks parallelism, requiring ongoing optimization to feed massive GPU clusters at full speed.

AI and HPC infrastructure needs are dynamic and require seamless growth of data and metadata, but outdated storage systems are inherently complex and inflexible.

A pioneer in data storage platforms for AI.

Innovating in AI since 2018.

Pure Storage FlashBlade//S™ is the trusted foundation for enterprise AI. With NVIDIA GPUDirect® Storage support, it eliminates bottlenecks, delivering direct-to-GPU data access at unmatched speeds. Backed by NVIDIA certifications (including NVIDIA DGX BasePOD™, NVIDIA DGX SuperPOD™, and more), FlashBlade//S is proven to accelerate AI at scale. Building on the success of FlashBlade//S, we’re pushing the boundaries further by optimizing metadata performance and scaling massively to meet the demands of large-scale AI and HPC.

From multimodal data management to high-concurrency workloads, FlashBlade//EXA powers the future of AI-driven innovation.

The cutting-edge architecture built for extreme AI scale.

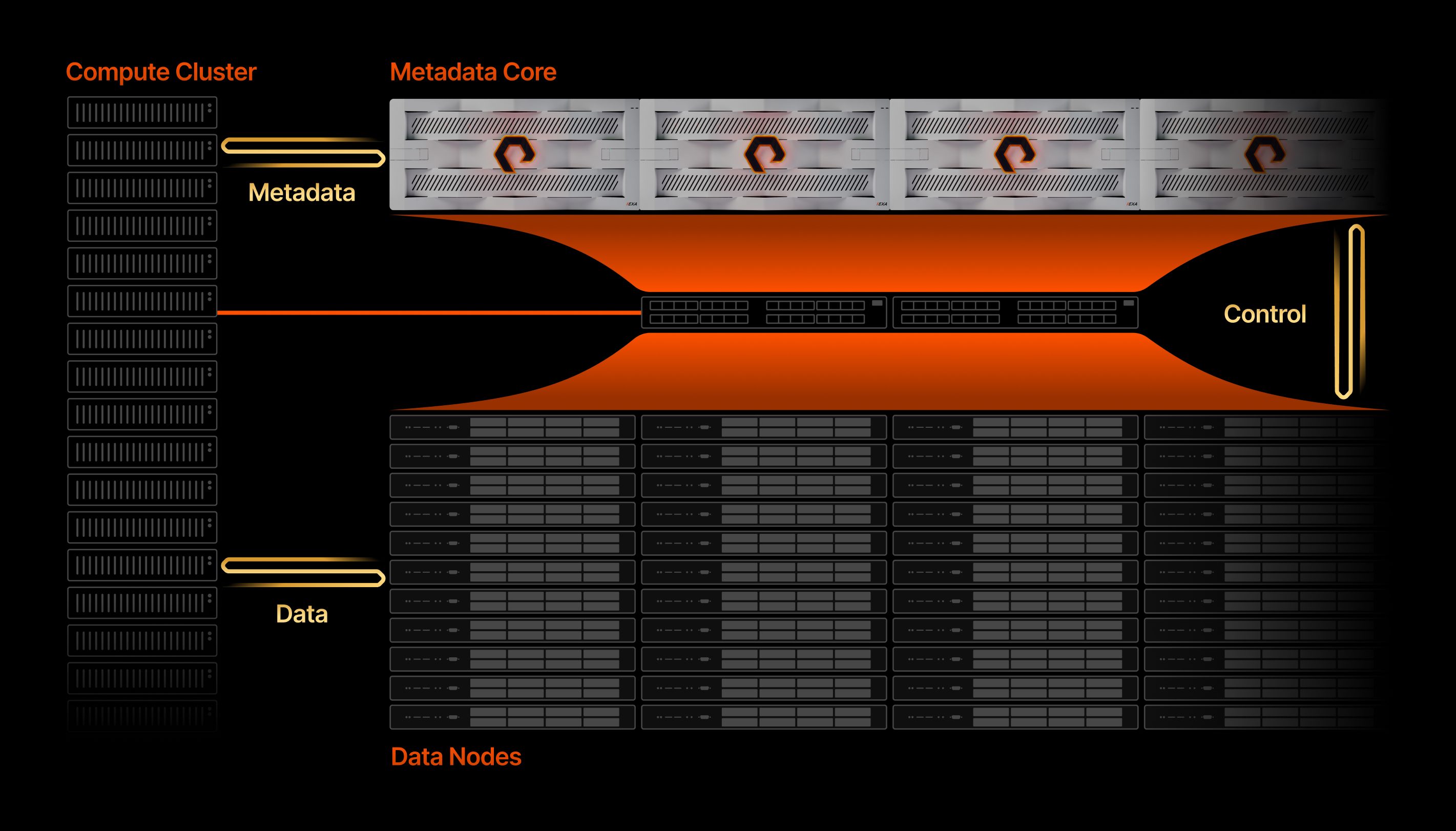

FlashBlade//EXA Architecture

Eliminate GPU idle time and accelerate data throughput with massive, low-latency metadata operations and cost-efficient data scaling. Seamlessly expand storage to achieve the highest levels of aggregate read performance in a single namespace. Train, tune, and run inference on the most powerful AI models without delays, keeping workflows efficient and delivering results faster.

Scale data and metadata effortlessly, with less complexity and manual tuning, even at exabyte scale. With a disaggregated data and metadata architecture free of scaling limits, FlashBlade//EXA keeps storage responsive to the ever-growing demands of AI.

Stay ahead of the AI revolution with a highly configurable architecture that evolves with next-generation AI and HPC workloads. Seamlessly integrate with leading AI ecosystems like NVIDIA while supporting diverse, multimodal data sets and AI models in the most demanding environments, including high-frequency trading, drug discovery, and advanced driver assistance systems (ADAS).

Dive deeper into the innovative architecture.

FlashBlade//EXA Metadata Core Specifications

FlashBlade//EXA Data Nodes Specifications

Leadership perspectives. The bold vision.