00:49

Yep. Alright. Hello everyone. Good afternoon. Happy lunch. So today we'll be talking about the FlashArray block data protection portfolio, and we'll be doing an interview with our customer Jared from Netgain to understand how they're

01:13

using Purity CloudSnap for their cost-effective cloud data protection strategy. All right. So, do you need business continuity with no downtime? I'm guessing we all do. For that, we have ActiveCluster. ActiveCluster provides zero RPO, zero

01:42

RTO replication for business continuity. It is symmetric in nature: reads and writes go to the same volume from either data center, so both sides are active-active. The transparent failover feature allows you to do non-disruptive

02:06

resynchronization and also provides non-disruptive failovers. And as with all our features, there are no bolt-ons, no additional licenses, and no extra hardware; it's built into the Purity operating system. Do you need cross-geography disaster recovery with simplified failover? We have a solution for that too, and that is ActiveDR.

02:40

ActiveDR provides near-zero RPO and low RTO data protection for disaster recovery. And as you can see, it works across virtually any distance, even across continents. We have customers running it with one data center in Ireland and the other in India. That's a long way for your business

03:10

continuity requirements. The RPO it provides is under 30 seconds. And the same simplicity of design is maintained in ActiveDR as in ActiveCluster: with three simple commands, you can test your failovers on a weekend without any downtime and orchestrate your

03:38

failovers as well, including during an actual downtime or system outage. Now, do you need periodic and robust backup? We have asynchronous replication to serve that need. This technology lets you configure the RPO that suits your business needs.

04:10

This solution is for near-term recovery. As you can see, asynchronous replication supports a multitude of topologies: one-to-many and many-to-one. A notable topology our customers use is fan-in, where multiple source arrays use asynchronous replication to fan in to one target FlashArray//C, or even the newly launched

04:43

FlashArray//E. This allows you to consolidate your secondary storage onto one array. And it goes without saying that asynchronous replication uses our proprietary snapshot technology, giving you point-in-time, consistent copies of your data, all of it configurable.

05:11

Now, do you need a cost-effective cloud archival solution? Yes, we have Purity CloudSnap. Purity CloudSnap allows you to use snapshots for near-term or long-term

05:36

snapshot archival in the cloud. These are some of the use cases for Purity CloudSnap. As I said, you can tier your data to the cloud for short-term and long-term backup, and you can also use Purity CloudSnap for disaster recovery, the same way as ActiveDR but targeting the cloud. There's also

06:08

data reuse in the cloud. One thing to note here is that Purity CloudSnap sends metadata along with the snapshot data to the cloud, which means you can re-spin your workloads from the cloud-based snapshots using CBS, our Cloud Block Storage offering,

06:35

and also use them for analytics and dev/test in the cloud. It also uses our underlying snapshot technology, which is fast and space-saving. We'll learn more when Jared tells us how the space-saving feature has helped Netgain in their cost-saving data protection strategy.

07:04

All right, enough of me talking. Now let me invite, with a drum roll, my colleague and Jared from Netgain to talk to us more about his experience with Purity CloudSnap. Hello. OK. Can we fix the mic?

07:32

Can everybody hear me now? Awesome. So, Jared, thanks a lot for coming all the way from your location to Vegas. I hope you're enjoying Vegas so far. So good. All right, let's have a seat and talk to our audience. So before we begin,

07:47

thanks for giving a brief overview. The whole purpose of this session was really to give you an overview of all the data protection capabilities we offer today. Everything we showed is available out of the box; no extra hardware or licensing is required, and we've really put a lot of heart and soul into making these features competitive.

08:09

So just because it's included doesn't mean it's not good. These are really competitive features; if you compare them with what's out there, you will find them very valuable. If you have any of the needs mentioned, like "do you need DR?", these are worth exploring and checking out.

08:26

If you're in touch with your SE, you can ask them about these features as well. A lot of our customers use all these functionalities; we have a very high attach rate for our data protection portfolio. So they're quite mature, and customers across all industries and around the world use them. With that: Jared is one of the customers who is using one

08:50

of these functionalities, CloudSnap, and today he's going to talk about their use case and their experience with it. Sure. All right, thanks for coming. Would you please share with everybody a little bit about Netgain? What does Netgain do, and what does your team offer at Netgain?

09:10

Sure. As we said, my name is Jared; I'm senior director of innovation for Netgain Technology. What our company does is managed hosting and services for the medical, legal, and financial verticals. We primarily partner with end clients: the clinics, the hospitals, the tax-preparation offices,

09:34

the wealth management offices. We bring all their applications into one of our data centers or one of the public clouds and work with them to serve that back to them, primarily via a managed desktop or a managed hybrid environment. Our scope and responsibility is that of an internal IT shop in an enterprise: help desk, systems operations, development, infrastructure,

09:58

security, backup, and security services. Awesome. And I think you've been in business for 15 years or something? I think it's 25. The company's been in business for 25 years; I've been with the organization for 16 years. I started in 2007 myself. And your objective as an organization is to

10:21

stay competitive on price as well, so you can offer your services at really competitive rates to your end clients. Yeah, we operate in a highly specialized and highly regulated space. But there's an ever-growing amount of data and ever-larger environments, and you're trying to offset that cost to the end clients as much as possible by bringing

10:42

innovative solutions, being more efficient with what you provision, and hitting the SLAs and goals you've promised them at the lowest possible cost. So in your industry, being cost-effective is one of the key differentiators in offering services to your clients? It is. They tend to be specialty and boutique.

11:04

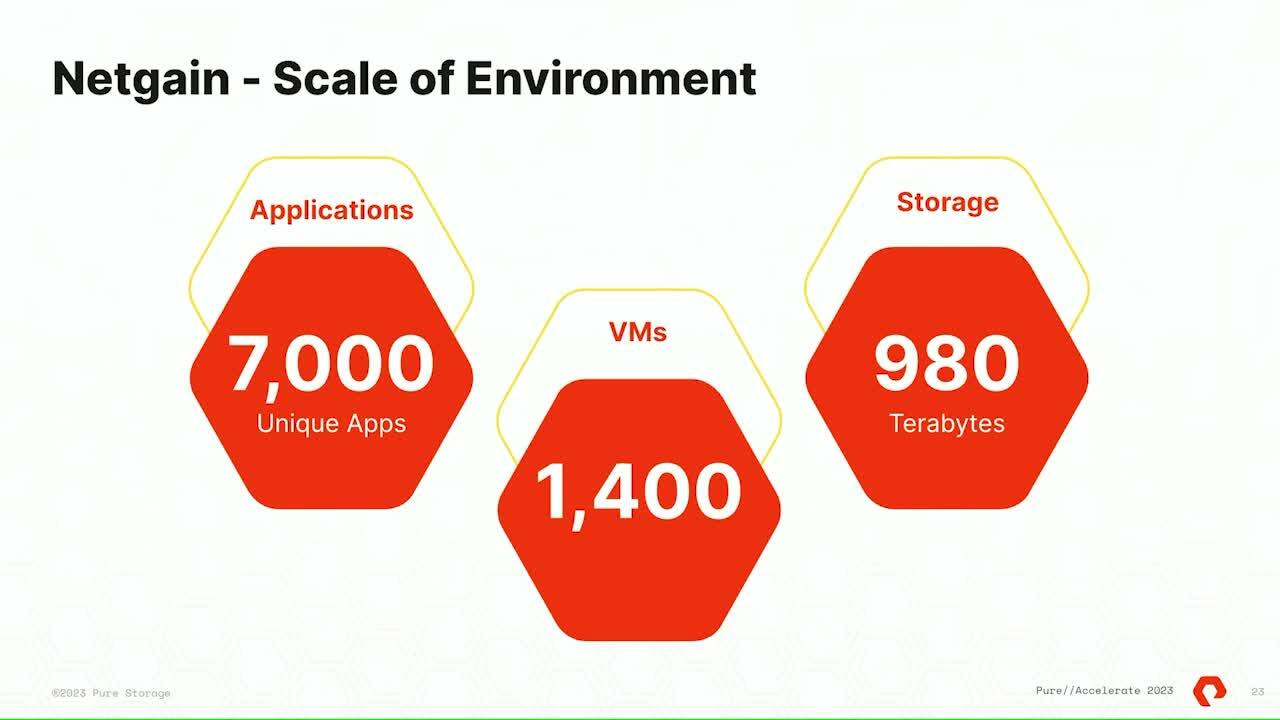

They have their needs, but you need to offset that cost for them. Awesome. So with that, tell us a little about the environment you manage. What's the scale of that environment? Sure. Netgain manages thousands of VMs across public and private clouds. Our private data centers, which represent about half of our

11:25

footprint, run about 1,400 to 1,500 VMs; that changes day to day, so it's always an estimate for us. But it's about 1,500 VM workloads serving tens of thousands of end users in three primary data centers. And this is important, because I was amazed at how few people manage all that infrastructure. So our virtualization, storage, and backup team

11:53

for that entire environment, including the public cloud footprint, is two individuals today, plus me. Wow. And is that a driving factor in your decision-making, that you want something cost-effective yet simple enough for your team to manage? Yeah. It's a big deal for us to be efficient

12:16

with the amount of employee time spent on any individual thing. That started our transition to flash and Pure in 2014, as we started adopting it in our data center. At that time, a huge amount of time was spent on performance troubleshooting, architecture optimization, and storage infrastructure optimization. All of that went away with our

12:36

transition to flash, and it continues to get better as we use more of the built-in feature sets. That's impressive: a vision of managing that whole environment with a very small team and running such a lean shop. Yeah, we try to be as lean as possible. Like I said, I started with the organization a while ago; I was our only storage and virtualization

13:00

administrator 15 years ago. All right, now let's talk about the DR requirements that led you to evaluate and then select CloudSnap as a solution. Sure. We need to meet certain SLAs with our clients on the frequency and the storage location for both the DR copies and the backup copies. For our organization, they need to hit a certain daily

13:31

frequency and be stored for a certain length of time; for us, depending on the vertical, that's either 13 weeks or 52 weeks for the part of the DR strategy we're talking about today. And I was really interested to learn that besides cost-effectiveness, cyber insurance and similar requirements play into your

13:57

decision-making as well. Yeah. Both we as an organization and each of our clients have certain regulations we have to comply with and respond to. In addition, they all have their own cyber insurance policies, both us as an organization and them individually, like everyone in

14:17

the industry does. So there is a lot of attestation we have to do on each individual client's environment to prove to them that things are backed up or DR-protected, and that things are encrypted everywhere, all the time. So we basically have to test each of those hundreds and hundreds of clients'

14:39

individual audits. And your customers, who are healthcare and financial customers, also have a very specific need for off-prem DR that they discuss with you, I think? Yes. Each of them is slightly different, but we need the DR copies to be outside the geographic region. They have to be geographically dispersed from all the individual data centers that we're

15:04

in. So that was a major point when we designed for it: the copies had to transit out of region. I see. So you didn't want to increase your data center footprint; you wanted to decrease it, right? Excuse me. Too much partying last night, you know.

15:23

So you wanted to decrease your footprint, and you also wanted to make sure that whatever solution you chose was cost-effective, without a lot of software add-on cost. And it shouldn't be the case that when you start backing up to the cloud, it takes three or four times the capacity in the cloud. Yeah, that was a big deal for us.

15:49

We evaluated CloudSnap when we were in the middle of a data center consolidation effort. We were in a couple of older facilities and were trying to transition to new facilities as well as collapse a couple. In doing so, we were trying to stay as efficient as possible, and DR and backup infrastructure can be a very significant footprint.

16:10

If you're using an ancillary system to move that data, you normally need local landing zones and workers or data movers to move it, which takes a compute footprint, and then you have to transit it to its final location. That's still useful in some cases to achieve certain objectives, but we were moving into a very tight footprint. And so we had actually done the evaluation:

16:33

we had read the release notes, thought "this looks interesting," and implemented it before our sales engineer knew we were doing so. We had such a good experience early on that we've continued to roll it forward. We went from about 35 to 40 racks of total footprint, and we're delivering those 1,700 or so VM

16:56

workloads in about four racks' worth of capacity today. Awesome. So space savings and cost savings are some of the advantages. What business benefits have you realized so far? Sure. We've managed to hit all of our SLAs to the clients.

17:15

We've managed to shrink our footprint and stay within it, even as the customer base grows organically and the customers themselves continue to grow. We've managed to attest to all their cybersecurity requirements and questionnaires. And we've been able to successfully restore from and use that offload target to meet those needs. Like any internal IT shop,

17:41

we do regular backups and restores for our clients. There's always "I deleted the PDF," or "I had an application vendor come do an install and it didn't go the way they expected," that sort of thing. We do on average 50 restores per month for those needs, and a large portion of them come from CloudSnap; we've been able to consistently hit those requirements every time.

18:05

And I think you mentioned that you've been able to save hundreds of thousands of dollars. Without sharing exact details, would you share how that happens with something like this? Yeah. The savings primarily came from the fact that we didn't need additional software, so we didn't need the maintenance and support

18:26

and backup software licensing to cover nearly a petabyte of primary storage. We still use backup software in certain scenarios to meet more complex SLAs, but we're able to reduce the scope of those requirements. We save footprint and power, and we don't have to bring in hardware to support it.

18:51

Of course, because if you're using backup software other than CloudSnap, you need the hardware you referred to as staging, plus licensing for that software. Correct. And all of that is avoided: the arrays hit an S3 bucket endpoint directly. There is no intermediary; you just point the array at the bucket and off it goes.

19:13

That brings up a good question: customers like yourself usually worry that if they enable this kind of replication on the array itself, it will take performance away from the array. No, we've seen zero impact. Functionally, it works like snapshots on the array; just as snapshots on the Pure arrays don't impact performance,

19:37

neither does this, at all. We push hundreds of thousands of IOPS to single VMDK workloads in our environment and haven't seen any variation. Great. All right, can we learn a little about your experience with CloudSnap in general? How has your experience been using the functionality and the feature set?

20:00

Sure. The best thing I can say about it is how simple it is. Being able to hit all of our goals while having it be incredibly easy to implement is a testament in itself.

20:24

We enabled it based on nothing other than public documentation and my engineer spending an hour. Realistically, all you do is enter the API endpoint and your access key and secret key, and let it go. That is the entirety of the configuration. Other than that, it's the same as snapshots: you set up a schedule and tell it to go to

20:45

the destination you configured in that setup step. That is really all there is to it. And how much data are you backing up today? On the source side, it's about 900 or so terabytes. As it lands on the destination, with retention on the far side, it's about 1.1 petabytes or so on the cloud target when you take all that into account. And how much,

21:11

or rather, how many VMs are protected with that? All of them: that entire 1,700-VM footprint we have in the data center. So they're all protected by it at minimum. And what RTO or SLA do you consider reasonable? We don't publish an RTO, but realistically, the restores are incredibly quick.

21:34

We're usually able to do a restore within the hour in almost all scenarios. The array uses its local dedupe table when doing a restoration, so it only pulls back the blocks that are different: if the data is already on the array, it doesn't have to re-pull it. So even for relatively large volumes

22:00

(our average volumes are about 16 terabytes, ranging up to 50-plus terabytes for individual ones), it really doesn't matter. As long as the change rate stays within a reasonable scope, it pulls the volume back really quickly, because it doesn't pull anything the array already has.
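The dedupe-aware restore Jared describes can be illustrated with a small model. This is a hypothetical sketch, not Purity's implementation: it assumes the cloud snapshot is described by an ordered list of block content hashes (`snapshot_manifest`), models the array's dedupe table as a dict from hash to block data (`local_dedupe_table`), and uses `fetch_from_cloud` as a stand-in for reading one block from the S3 bucket. All three names are invented for illustration.

```python
import hashlib
from typing import Callable, Dict, List, Tuple


def block_hash(data: bytes) -> str:
    """Content fingerprint for a block (SHA-256 is an illustrative choice)."""
    return hashlib.sha256(data).hexdigest()


def restore_volume(
    snapshot_manifest: List[str],
    local_dedupe_table: Dict[str, bytes],
    fetch_from_cloud: Callable[[str], bytes],
) -> Tuple[List[bytes], int]:
    """Rebuild a volume from a cloud snapshot, fetching only blocks the array lacks.

    Hypothetical model: the manifest lists block hashes in volume order, and the
    local table maps hashes of blocks already stored on the array to their data.
    Returns the restored blocks and how many blocks were fetched from the cloud.
    """
    restored: List[bytes] = []
    fetched = 0
    for h in snapshot_manifest:
        block = local_dedupe_table.get(h)
        if block is None:
            # Block not on the array: pull it from the object store once and
            # record it, so later references to the same hash are local hits.
            block = fetch_from_cloud(h)
            local_dedupe_table[h] = block
            fetched += 1
        restored.append(block)
    return restored, fetched


if __name__ == "__main__":
    # Toy example: the array already holds blocks A and B; only C must be fetched.
    cloud = {block_hash(b): b for b in (b"A", b"B", b"C")}
    local = {block_hash(b"A"): b"A", block_hash(b"B"): b"B"}
    manifest = [block_hash(b) for b in (b"A", b"C", b"B", b"C")]
    blocks, fetched = restore_volume(manifest, local, cloud.__getitem__)
    print(blocks, fetched)
```

In this model the number of fetched blocks tracks how much of the volume has changed rather than the volume's total size, which is consistent with restores of 16 TB to 50-plus TB volumes remaining quick when the change rate is modest.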

22:20

That actually brings us to the conclusion, but this gentleman and I had an interesting conversation over lunch about the ransomware use case, and I found it super exciting. How do you deal with ransomware using the CloudSnap functionality? Yeah. Like any environment where there's a shared responsibility model with the end clients, there's always a risk

22:42

they'll do something that endangers them, and you're responsible for helping them out of it. In that way, we've absolutely had to do some pretty large-scale recoveries on behalf of clients who made those decisions. Again, the fact that it's everywhere in this case meant that we were able to pull

23:00

back hundreds of VMs within a couple of hours and have them re-promoted. And because dedupe is global on the arrays, we were able to pull nearly a petabyte of data back onto the array without adding a single disk, since it was deduped against what was already there. We were able to promote all the copies,

23:22

do the data extractions (in some cases we were doing data merges with the existing data sets, depending on the scope of the impact), and do all of that without spending a single extra dollar on storage, which was kind of amazing to us. And we could maintain that for days or weeks and get that information to the relevant authorities, the cyber insurance providers, and so on.

23:44

We had time to think and to do it properly without impacting array performance or running out of storage space. Awesome. Now, the most important question: what's your plan for the evening? Probably food and sleep. All right. Thanks a lot, Jared. We're open for questions if you have any

24:04

questions for Jared. Thanks a lot for coming over here.