Regardless of how a system is constructed, it is inevitable that failures will occur. High-availability storage systems need to be designed to continue to serve accurate data despite failures. Recovery from failures also needs to be rapid and non-disruptive, two additional requirements driving storage system design in today’s business climate. Running two identical systems in parallel was an early approach to achieving high availability, but a variety of innovations over the last two or three decades now meet high availability requirements in far more cost-effective ways. These include dual-ported storage devices, hot-pluggable components, multi-pathing with transparent failover, N+1 designs, stateless storage controllers, more granular fault isolation, broader data distribution, and an evolution towards more software-defined availability management.

Architectural complexity is a key detractor from system-level reliability. Given a target combination of performance and capacity, the system with fewer components will be simpler, more reliable, easier to deploy and manage, and will likely consume less energy and floor space. Contrasting dual with multi-controller system architectures can help to illuminate this point. The contention that multi-controller systems deliver higher availability (the primary claim that vendors offer) is based on the argument that a multi-controller system can sustain more simultaneous controller failures while still continuing to operate. While theoretically that is true, multi-controller systems require more components, are more complex and pose many more fault modes that must be thoroughly tested with each new release.
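Both sides of this trade-off can be put into rough numbers. The sketch below is illustrative only: it assumes identical components that fail independently, and the 0.999 availability figure is a hypothetical placeholder, not a measured value. It shows that adding controllers raises the theoretical chance that at least one is up, while every additional component that the system depends on lowers the availability of the chain as a whole. It does not model the software fault modes discussed above.

```python
from math import comb


def series_availability(component_availability: float, count: int) -> float:
    """Availability of a chain in which all `count` components must be up."""
    return component_availability ** count


def at_least_k_of_n(component_availability: float, n: int, k: int = 1) -> float:
    """Probability that at least k of n independent, identical components are up."""
    a = component_availability
    return sum(comb(n, i) * a**i * (1 - a) ** (n - i) for i in range(k, n + 1))


if __name__ == "__main__":
    a = 0.999  # hypothetical per-component availability (assumption)
    for count in (10, 50, 200):
        print(f"{count:>3} components in series: {series_availability(a, count):.4f}")
    for n in (2, 4):
        print(f"at least 1 of {n} controllers up: {at_least_k_of_n(a, n):.12f}")
```

Under these assumptions the gain from extra controllers appears many decimal places out, while the loss from a longer dependency chain appears much sooner. Real systems violate the independence assumption, which is one reason test coverage of fault modes matters as much as the arithmetic.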

The experience of many enterprises, however, is that these more complex multi-controller systems do not deliver higher reliability in practice. Dual controller systems have fewer components, are less complex, and have far fewer fault modes that must be tested with new releases. One interesting innovation is to use the same "failover" process for both planned upgrades and failures. This simplifies storage operating system code and makes that common process better tested and more reliable than the separate processes that most storage systems use to handle failures and upgrades. As long as a dual controller system delivers the required performance and availability in practice, it will be a simpler, more reliable system with fewer components and very likely more comprehensively tested failure behaviors. Simpler, more reliable systems mean that less kit needs to be shipped (driving lower shipping, energy and floor space costs), that less redundancy is needed to meet availability SLAs (driving lower system costs), and that fewer service visits are required.
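The shared failover idea can be sketched in a few lines. This is a toy model under stated assumptions, not any vendor's implementation: the class and method names (`Controller`, `DualControllerPair`, `failover`, and the two event handlers) are hypothetical. The point it illustrates is that planned upgrades and unplanned failures both funnel into one takeover routine, so that routine is exercised on every upgrade.

```python
from dataclasses import dataclass, field


@dataclass
class Controller:
    name: str
    active: bool = False


@dataclass
class DualControllerPair:
    primary: Controller
    secondary: Controller
    log: list = field(default_factory=list)

    def failover(self, reason: str) -> Controller:
        """Single takeover path used for every kind of controller outage."""
        self.primary.active = False
        self.secondary.active = True
        # Swap roles so the surviving controller becomes the primary.
        self.primary, self.secondary = self.secondary, self.primary
        self.log.append(reason)
        return self.primary

    def on_controller_failure(self) -> Controller:
        # Unplanned event: reuse the common path.
        return self.failover("unplanned: controller failure")

    def on_planned_upgrade(self) -> Controller:
        # Planned event: same code path, different reason.
        return self.failover("planned: controller upgrade")


if __name__ == "__main__":
    pair = DualControllerPair(Controller("A", active=True), Controller("B"))
    print(pair.on_planned_upgrade().name)
    print(pair.log)
```

Because both handlers delegate to `failover`, there is only one takeover routine to test, which is the simplification the paragraph above describes.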

Storage managers need to determine the levels of resiliency they require: component, system, rack, pod, data center, power grid, etc. For each level, the goal is to deploy a storage system that can continue to serve accurate data despite a failure at that level. Many storage vendors provide system architectures that use software to transparently sustain and recover from failures in individual components like storage devices, controllers, network cards, power supplies, fans and cables. Software features like snapshots and replication give customers the tools they need to address larger fault domains like enclosures, entire systems, racks, pods, data centers, and even power grids. Systems designed for easier serviceability simplify any maintenance operations required when components do need to be replaced or upgraded.

Designs that reduce human involvement during recovery have a positive impact on overall resiliency. Humans are the least reliable "component" in a system, and less human involvement means fewer opportunities for a technician to issue the wrong command, pull the wrong device, or jostle or remove the wrong cable.

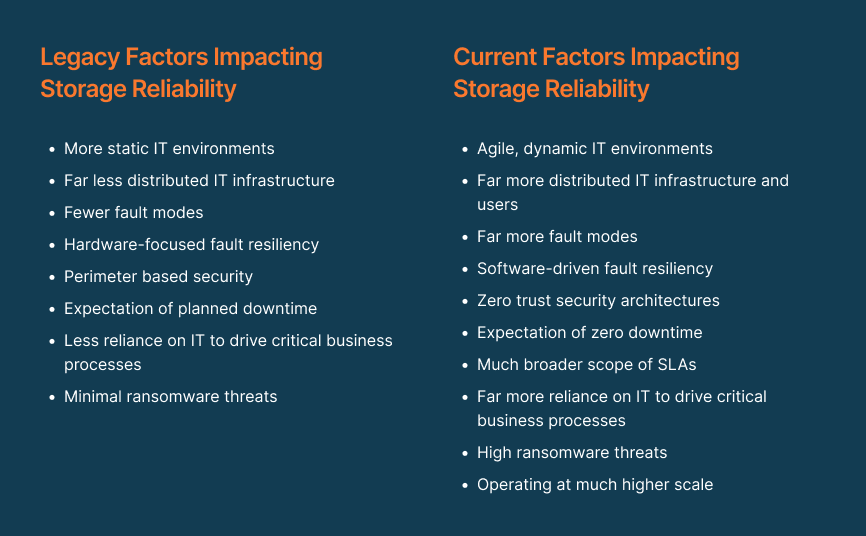

While initially focused more on hardware redundancies, the storage industry's approach to resiliency has changed in two ways. First, we have moved away from a focus on system-level availability to one that centers on service availability. System-level availability is still a critical input, but modern software architectures offer significantly more agile options for ensuring the availability of various services, whether those are compute, storage or application based. Second, fault detection, isolation and recovery have become much more granular, more software-based and much faster than in the past, providing more agility than was possible with less cost-efficient hardware-based approaches.

With data growing at 30% to 40% per year¹ and most enterprises dealing with at least multi-PB data sets, storage infrastructures tend to be larger and to have many more devices than in the past. A system with more components can be less reliable, and vendors building large storage systems have to ensure they have the resiliency to meet higher availability requirements when operating at scale. Flash-based storage devices demonstrate both better device-level reliability and higher densities than hard disk drives (HDDs), and they can transfer data much faster, which reduces data rebuild times (generally a concern as enterprises consider moving to larger device sizes). The ability to use larger storage devices without undue impacts on performance and recovery time results in systems with fewer components and less supporting infrastructure (controllers, enclosures, power supplies, fans, switching infrastructure, etc.). Such systems have a lower manufacturing carbon dioxide equivalent (CO2e), cost less to ship, use less energy, rack space and floor space during production operation, and generate less e-waste. While highly resilient storage system architectures can be built using HDDs, it can be far simpler to build them using larger flash-based storage devices that are both more reliable and have far faster data rebuild times.
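The relationship between device size, transfer rate and rebuild time, and the effect of compound data growth, can be illustrated with simple arithmetic. The sketch below is a rough model under stated assumptions: rebuild time is approximated as capacity divided by sustained rebuild throughput, which is a lower bound that ignores parity computation and competing host I/O. The device size and throughput figures in the usage section are hypothetical placeholders, not vendor specifications.

```python
def rebuild_hours(capacity_tb: float, throughput_mb_s: float) -> float:
    """Lower bound on the time to re-create one device's worth of data."""
    capacity_mb = capacity_tb * 1_000_000  # decimal units: 1 TB = 1,000,000 MB
    return capacity_mb / throughput_mb_s / 3600


def projected_capacity(start_pb: float, annual_growth: float, years: int) -> float:
    """Capacity after compounding `annual_growth` (0.30 means 30%) for `years`."""
    return start_pb * (1 + annual_growth) ** years


if __name__ == "__main__":
    # Hypothetical device size and sustained rebuild rates (assumptions).
    for label, mb_s in (("slower device", 200), ("faster device", 2000)):
        print(f"{label}: {rebuild_hours(20, mb_s):.1f} h to rebuild 20 TB")

    # Growth range taken from the text: 30% to 40% per year.
    for rate in (0.30, 0.40):
        print(f"{rate:.0%} growth: 1 PB becomes "
              f"{projected_capacity(1, rate, 5):.2f} PB after 5 years")
```

In this model rebuild time scales linearly with device capacity and inversely with throughput, which is why faster devices make larger device sizes practical.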